Using multi-agent deep reinforcement learning for stock, crypto and options trading

Role

Industry

Duration

Problem statement

How might someone use deep reinforcement learning (DRL) to execute profitable long and short stock trades in a pattern day trading application? I built this project to grow my DRL chops and to build experience in the fintech space. My hypothesis, borne out by industry research, was that DRL (in combination with other technologies) would be a strong foundation for quantitative securities trading.

Process

I started with research and found several DRL quant trading frameworks, including ElegantRL and FinRL. Because this was a personal project, I didn't need to focus deeply on external use cases. I evaluated the frameworks and began prototyping.

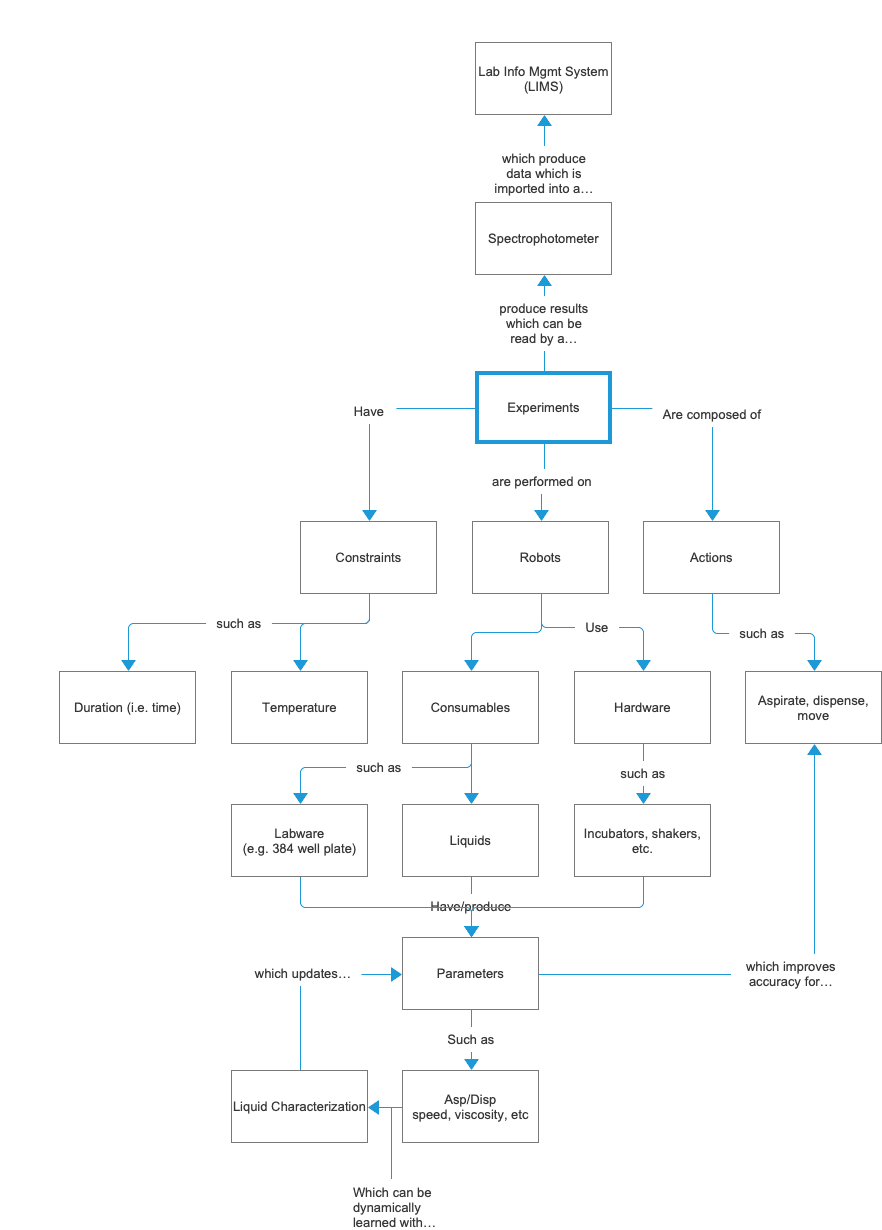

Architecture

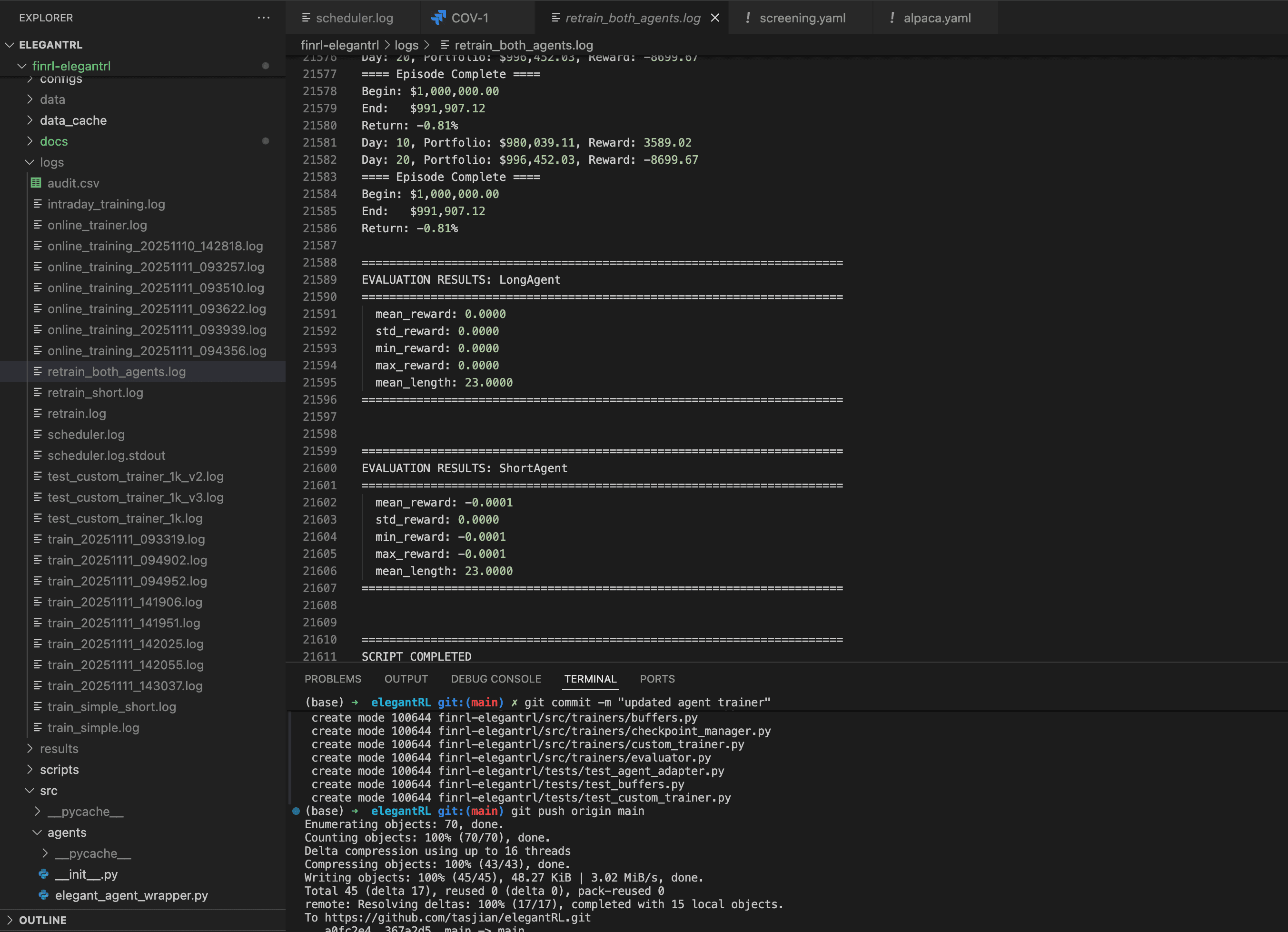

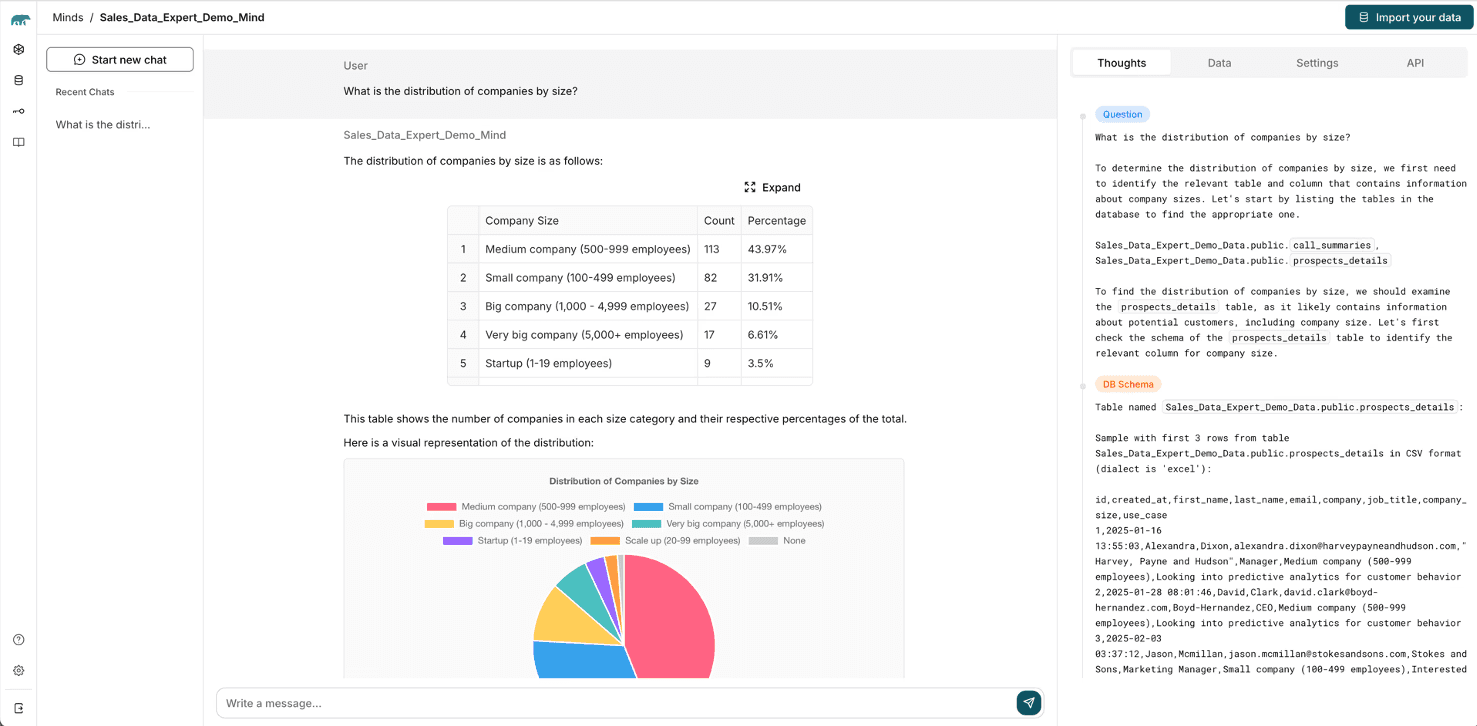

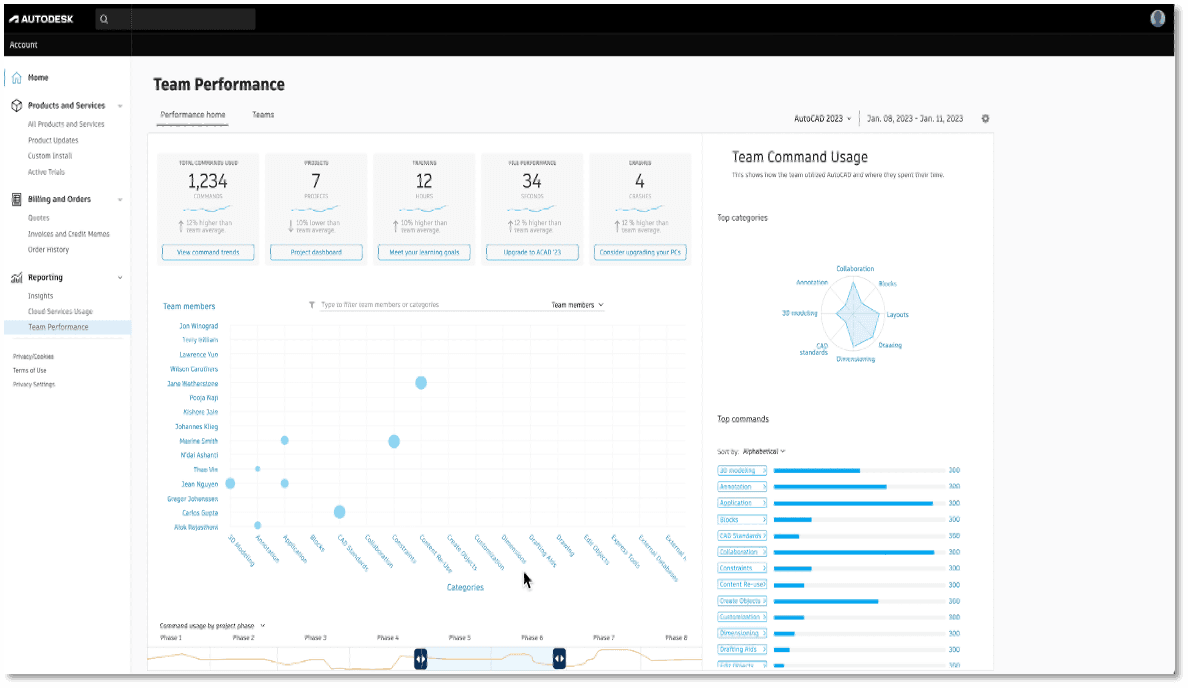

The prototype used Alpaca (pictured) as the front end for the ML app. There was no custom front end for this project; the application interacted with Alpaca through its API. Initial iterations used a locally running LLM (GPT5o nano, via Ollama) for sentiment analysis in stock selection, and the result was part of the weighting of decisions passed into the DRL agents. This proved ineffective and was later removed. I wanted to include both long and short positions, so I used a multi-agent system with one agent for long trades and one for short trades. The long agent used PPO (Proximal Policy Optimization) and the short agent used SAC (Soft Actor-Critic). Both were trained for 50K steps on intraday stock data from the EODHD data API.
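With separate long and short agents, something has to decide what happens when both want the same ticker. The following is a minimal sketch of one way to reconcile the two, not the project's actual coordination logic. The names `AgentSignal` and `reconcile`, the confidence score, and both thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AgentSignal:
    """Hypothetical output of one agent for one ticker."""
    ticker: str
    confidence: float  # assumed range: 0.0 (no conviction) to 1.0


def reconcile(
    long_signals: Dict[str, AgentSignal],
    short_signals: Dict[str, AgentSignal],
    min_confidence: float = 0.6,  # assumed threshold
    min_margin: float = 0.1,      # assumed threshold
) -> Dict[str, str]:
    """Return {ticker: "long" | "short"} for tickers worth trading.

    Assumed rule: ignore weak signals, and when the two agents disagree
    on a ticker, act only if one side wins by at least min_margin.
    Otherwise stay flat on that ticker.
    """
    decisions: Dict[str, str] = {}
    for ticker in sorted(set(long_signals) | set(short_signals)):
        long_c = long_signals[ticker].confidence if ticker in long_signals else 0.0
        short_c = short_signals[ticker].confidence if ticker in short_signals else 0.0
        if max(long_c, short_c) < min_confidence:
            continue  # neither agent is convinced enough
        if abs(long_c - short_c) < min_margin:
            continue  # agents roughly cancel out
        decisions[ticker] = "long" if long_c > short_c else "short"
    return decisions
```

Keeping this step outside the agents means each one can be trained and swapped independently, at the cost of one more set of thresholds to tune.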

Design strategy

Training: The agents were first trained on historical intraday data for 10 stock tickers going back to 2023, then retrained incrementally online every 60 minutes throughout the trading day. Initially, I used a rules-based, deterministic stock picker to reduce the entire stock universe to approximately 10 positions. It turned out to be easier to let the DRL agents pick the stocks and then optimize as they learned.
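The 60-minute retraining cadence can be pictured as a simple schedule over the trading session. This is a sketch under assumptions: the function name `retrain_times` is hypothetical, and the choice to fire the first retrain one full interval after the open (and never at or after the close) is mine, not something the project specified.

```python
from datetime import datetime, timedelta
from typing import List


def retrain_times(
    session_open: datetime,
    session_close: datetime,
    interval_minutes: int = 60,
) -> List[datetime]:
    """List the moments at which an incremental retrain would fire.

    Assumption: the first retrain happens one full interval after the
    open, so there are fresh bars to learn from, and nothing fires at
    or after the close.
    """
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    step = timedelta(minutes=interval_minutes)
    times: List[datetime] = []
    current = session_open + step
    while current < session_close:
        times.append(current)
        current += step
    return times


# Example: a regular US equity session, 09:30 to 16:00 local exchange time.
schedule = retrain_times(
    datetime(2024, 1, 2, 9, 30),
    datetime(2024, 1, 2, 16, 0),
)
```

For that session the schedule contains six retrain points, from 10:30 through 15:30.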

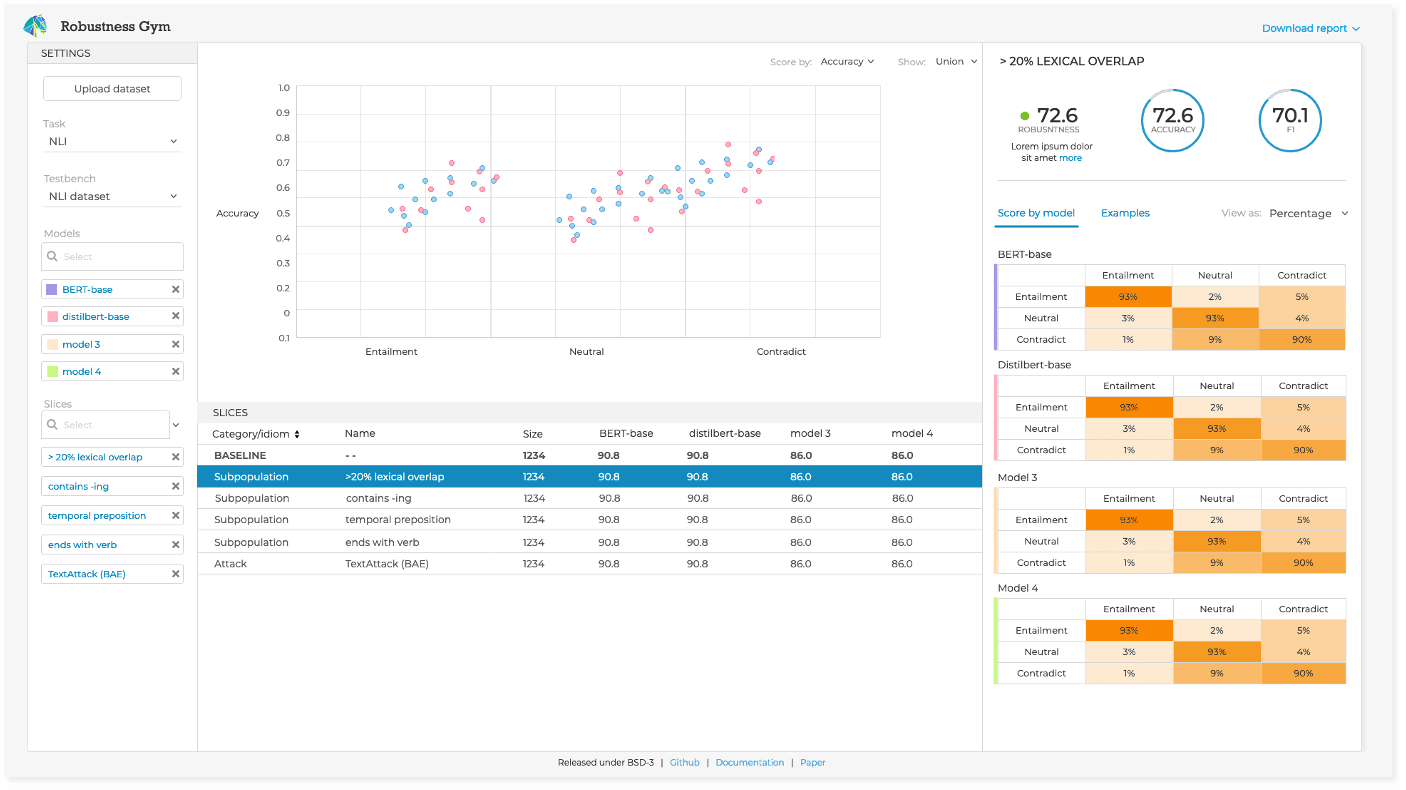

Tuning: Several critical hyperparameters affected performance. The obvious ones were total training steps and learning rate, but the triggers for when to sell mattered as well. It was also challenging to tune limit versus market orders, and short versus long positions. For example, before I implemented the multi-agent strategy, the system converted unprofitable longs into shorts.
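To make the idea of tunable sell triggers concrete, here is a minimal sketch that treats take-profit and stop-loss thresholds as hyperparameters. `ExitConfig`, `position_return`, `should_exit`, and the 2% and 1% values are all hypothetical; the project's real triggers are not documented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitConfig:
    """Hypothetical exit thresholds, expressed as fractional returns."""
    take_profit: float = 0.02  # assumed: close after a 2% gain
    stop_loss: float = 0.01    # assumed: close after a 1% loss


def position_return(entry_price: float, last_price: float, side: str) -> float:
    """Fractional return of an open position; shorts gain when price falls."""
    if entry_price <= 0:
        raise ValueError("entry_price must be positive")
    if side not in ("long", "short"):
        raise ValueError("side must be 'long' or 'short'")
    change = (last_price - entry_price) / entry_price
    return change if side == "long" else -change


def should_exit(
    entry_price: float,
    last_price: float,
    side: str,
    config: ExitConfig = ExitConfig(),
) -> bool:
    """True when the position has hit either threshold."""
    r = position_return(entry_price, last_price, side)
    return r >= config.take_profit or r <= -config.stop_loss
```

Because the sign of the return flips for shorts, the same two thresholds cover both agents, although in practice long and short positions may well need separate values.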

Results

Results were mixed. The application did really well at times and completely failed in other market conditions (I never used real money; all trading was paper trading). Portfolio value would be the obvious metric for success; however, I was typically looking for a day-over-day increase rather than a total number. The Sharpe ratio also became a valuable metric as the project progressed.
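Both metrics mentioned above can be computed from a series of end-of-day portfolio values. The sketch below uses the conventional annualized Sharpe ratio (mean excess return divided by its sample standard deviation, scaled by the square root of the number of periods per year). The function names, the zero risk-free rate, and the 252-trading-day year are assumptions for illustration.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence


def daily_returns(portfolio_values: Sequence[float]) -> List[float]:
    """Day-over-day fractional change in portfolio value."""
    return [
        (curr - prev) / prev
        for prev, curr in zip(portfolio_values, portfolio_values[1:])
    ]


def sharpe_ratio(
    returns: Sequence[float],
    risk_free_daily: float = 0.0,  # assumed: risk-free rate ignored
    periods_per_year: int = 252,   # assumed: trading days per year
) -> float:
    """Annualized Sharpe ratio of a series of per-period returns."""
    if len(returns) < 2:
        raise ValueError("need at least two returns")
    excess = [r - risk_free_daily for r in returns]
    spread = stdev(excess)  # sample standard deviation
    if spread == 0:
        raise ValueError("returns have zero variance")
    return mean(excess) / spread * math.sqrt(periods_per_year)
```

A series of closing values such as `[100.0, 110.0, 99.0]` yields daily returns of 10% and -10%, which average out to a Sharpe ratio of zero despite the large swings.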

Challenges

This project had MANY challenges:

Executing trades in Alpaca: There were all kinds of issues with liquidity and meeting the requirements of pattern day trading.

Acquiring data: Most premium data in the fintech space is extremely expensive. It was challenging to find and acquire cheap data to train the models.

Implementing the DRL agents: Building agents that could intelligently interact with each other, the stock universe and Alpaca was non-trivial. This required deeply refactoring the architecture multiple times. It was also a performance bottleneck.